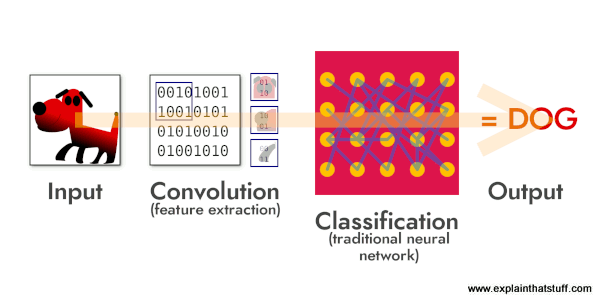

In this notebook, we will train a simple Convolutional Neural Network (CNN) on the CIFAR-10 dataset. CIFAR-10 consists of 60,000 32x32 color images (50,000 for training and 10,000 for testing) in 10 classes, such as airplane, automobile, bird, and cat. You can imagine an IoT device (like a Raspberry Pi with a camera) capturing images and either classifying them on the device itself (edge computing) or sending them to the cloud for classification. This small-scale example demonstrates how a CNN learns to recognize different objects in images.

1. Imports and Dataset Loading

We start by importing the necessary libraries and loading the CIFAR-10 dataset from Keras.

```python
import tensorflow as tf
from tensorflow.keras import datasets, layers, models
import matplotlib.pyplot as plt
import numpy as np

# Load CIFAR-10: 50,000 training and 10,000 test images; labels have shape (N, 1)
(x_train, y_train), (x_test, y_test) = datasets.cifar10.load_data()

# Normalize pixel values from integers in [0, 255] to floats in [0, 1]
x_train = x_train.astype('float32') / 255.0
x_test = x_test.astype('float32') / 255.0

print("Training data shape:", x_train.shape)  # (50000, 32, 32, 3)
print("Testing data shape:", x_test.shape)    # (10000, 32, 32, 3)
```
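To see what the normalization step does without downloading the dataset, here is a minimal NumPy sketch on a hypothetical batch of random uint8 pixels with the same per-image shape as CIFAR-10. It also works out why the float32 conversion matters for memory: the 50,000 training images hold 153.6 million pixel values, which take about 614 MB as float32 (4 bytes each) versus about 154 MB in the original uint8 form.

```python
import numpy as np

# Hypothetical stand-in for CIFAR-10 images: random pixels in [0, 255],
# stored as uint8 with the same per-image shape (32, 32, 3)
rng = np.random.default_rng(0)
fake_batch = rng.integers(0, 256, size=(8, 32, 32, 3)).astype(np.uint8)

# Same scaling as the notebook: cast to float32 first, then divide by 255.0
scaled = fake_batch.astype('float32') / 255.0
print(fake_batch.dtype, int(fake_batch.min()), int(fake_batch.max()))
print(scaled.dtype, float(scaled.min()), float(scaled.max()))

# Memory needed for the full training set (50,000 images of 32x32x3 values)
train_values = 50_000 * 32 * 32 * 3
print(f"uint8:   {train_values * 1 / 1e6:.1f} MB")  # 153.6 MB
print(f"float32: {train_values * 4 / 1e6:.1f} MB")  # 614.4 MB
```

Casting before dividing keeps the result in float32; dividing the uint8 array by 255.0 directly would produce float64 and double the memory again.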

2. Visualizing the Data

Let's quickly look at a few samples from the dataset to get a feel for the images we're dealing with.

```python
class_names = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

# Show the first 5 training images with their labels
fig, axes = plt.subplots(1, 5, figsize=(10, 2))
for i in range(5):
    axes[i].imshow(x_train[i])
    label = y_train[i][0]  # y_train[i] is a one-element array: [label]
    axes[i].set_title(class_names[label])
    axes[i].axis('off')
plt.show()
```
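The `y_train[i][0]` indexing is needed because Keras returns CIFAR-10 labels as a column of shape (N, 1) rather than a flat vector. This short NumPy sketch uses hypothetical labels to show the flattening and how to count images per class with `np.bincount`; on the real training split, `np.bincount(y_train.ravel())` should report 5,000 images for each of the 10 classes.

```python
import numpy as np

class_names = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

# Hypothetical labels shaped like y_train: a column of shape (N, 1)
y_example = np.array([[2], [7], [7], [1], [4]])

# Flatten (N, 1) -> (N,) so each entry is a plain integer class index
flat = y_example.ravel()
names = [class_names[i] for i in flat]
print(y_example.shape, flat.shape)  # (5, 1) (5,)
print(names)  # ['bird', 'horse', 'horse', 'automobile', 'deer']

# Images per class; minlength keeps classes that never appear (count 0)
counts = np.bincount(flat, minlength=len(class_names))
print(dict(zip(class_names, counts.tolist())))
```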

3. Building the CNN Model

We will construct a simple CNN with two convolutional blocks, each a Conv2D layer followed by a max-pooling layer, and finally two fully connected layers: a 64-unit hidden layer and a 10-unit softmax output layer that performs the classification.

```python
model = models.Sequential()

# First convolutional block: 32 filters of size 3x3, then 2x2 max pooling
model.add(layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu', input_shape=(32, 32, 3)))
model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# Second convolutional block: 64 filters of size 3x3, then 2x2 max pooling
model.add(layers.Conv2D(filters=64, kernel_size=(3, 3), activation='relu'))
model.add(layers.MaxPooling2D(pool_size=(2, 2)))

# Flatten the 3D feature maps into a 1D vector
model.add(layers.Flatten())

# Fully connected layers
model.add(layers.Dense(64, activation='relu'))
model.add(layers.Dense(10, activation='softmax'))  # one output per CIFAR-10 class

model.summary()
```
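The output shapes and parameter counts that `model.summary()` prints can be reproduced by hand. The sketch below assumes Keras defaults (`padding='valid'` and stride 1 for Conv2D, stride equal to the pool size for MaxPooling2D); the helper functions are our own, not part of Keras.

```python
def conv2d_valid(shape, filters, k=3):
    """'valid' padding, stride 1: each spatial side shrinks by k - 1."""
    h, w, c_in = shape
    params = k * k * c_in * filters + filters  # kernel weights + one bias per filter
    return (h - k + 1, w - k + 1, filters), params

def max_pool(shape, p=2):
    """Pool size p with stride p: floor division, no trainable parameters."""
    h, w, c = shape
    return (h // p, w // p, c), 0

def flatten(shape):
    n = 1
    for d in shape:
        n *= d
    return (n,), 0

def dense(shape, units):
    (n_in,) = shape
    return (units,), n_in * units + units  # weight matrix + bias vector

shape = (32, 32, 3)
trace = []
for name, fn in [
    ("conv2d (32 filters)", lambda s: conv2d_valid(s, 32)),
    ("max_pooling2d", max_pool),
    ("conv2d (64 filters)", lambda s: conv2d_valid(s, 64)),
    ("max_pooling2d", max_pool),
    ("flatten", flatten),
    ("dense (64)", lambda s: dense(s, 64)),
    ("dense (10)", lambda s: dense(s, 10)),
]:
    shape, params = fn(shape)
    trace.append((name, shape, params))
    print(f"{name:<22} {str(shape):<14} {params:>8}")

total_params = sum(p for _, _, p in trace)
print("Total params:", total_params)  # 167562
```

Note that the second pooling layer turns 13x13 into 6x6 (the odd last row and column are dropped), and that the first Dense layer alone holds 147,520 of the 167,562 parameters, roughly 88%. That layer is the natural place to trim if the model has to fit on a small device.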

4. Compiling and Training the Model

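We use sparse_categorical_crossentropy as our loss function, since the CIFAR-10 labels are integer class indices (0 to 9) rather than one-hot vectors, and Adam as our optimizer. We then train for five epochs with a batch size of 64.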

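```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',  # labels are integer indices
              metrics=['accuracy'])

# Note: the test set doubles as validation data here, so the per-epoch
# val_accuracy reported during training is measured on the test images
history = model.fit(x_train, y_train, epochs=5,
                    validation_data=(x_test, y_test),
                    batch_size=64)
```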
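The two cross-entropy losses compute the same quantity and differ only in the label format they expect: sparse_categorical_crossentropy takes integer indices like `y_train`, while categorical_crossentropy expects one-hot vectors. Here is a NumPy sketch (our own re-implementation, not Keras's code) with hypothetical logits confirming that they agree, plus the number of gradient steps per epoch implied by `batch_size=64`, which is the count Keras's progress bar shows.

```python
import math
import numpy as np

def softmax(logits):
    # Subtract each row's max before exponentiating, for numerical stability
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def sparse_ce(y_true, probs, eps=1e-7):
    # y_true: integer class indices, shape (N,) or (N, 1) like y_train
    idx = np.asarray(y_true).ravel()
    picked = probs[np.arange(len(idx)), idx]  # probability of the true class
    return -np.log(np.clip(picked, eps, 1.0))

def categorical_ce(y_onehot, probs, eps=1e-7):
    # y_onehot: one-hot rows, shape (N, num_classes)
    return -np.sum(y_onehot * np.log(np.clip(probs, eps, 1.0)), axis=1)

# Hypothetical logits for 3 images over the 10 classes
rng = np.random.default_rng(1)
probs = softmax(rng.normal(size=(3, 10)))
labels = np.array([[3], [8], [0]])  # shaped (N, 1), like y_train

loss_sparse = sparse_ce(labels, probs)
loss_onehot = categorical_ce(np.eye(10)[labels.ravel()], probs)
print(np.allclose(loss_sparse, loss_onehot))  # True

# Training steps per epoch with batch_size=64 (the last batch is partial)
steps_per_epoch = math.ceil(50_000 / 64)
print(steps_per_epoch)  # 782
```

Keeping the sparse form avoids materializing a 50,000 x 10 one-hot matrix for labels that are already integers.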

5. Evaluating the Model

We evaluate how well the CNN performs on the test set.

```python
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=2)
print(f"Test accuracy: {test_acc:.2f}")
```
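`model.evaluate` reports accuracy as the fraction of test images whose highest-probability class matches the label. Below is a NumPy sketch of that computation, plus a confusion matrix that shows which classes get mixed up, using hypothetical predictions. With the trained model, you would pass `model.predict(x_test)` and `y_test` instead, and the hand-computed accuracy should agree closely with `test_acc`.

```python
import numpy as np

def accuracy(y_true, probs):
    """Fraction of samples whose highest-probability class equals the label."""
    y = np.asarray(y_true).ravel()
    return float(np.mean(np.argmax(probs, axis=1) == y))

def confusion_matrix(y_true, y_pred, num_classes=10):
    """Counts with rows = true class and columns = predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true).ravel(), np.asarray(y_pred).ravel()), 1)
    return cm

# Hypothetical labels (shaped (N, 1) like y_test) and model decisions
y_true = np.array([[0], [1], [3], [5]])
pred_idx = np.array([0, 1, 5, 5])  # the cat (3) is mistaken for a dog (5)

# Softmax-like rows that put 0.55 on the predicted class (each row sums to 1)
probs = np.full((4, 10), 0.05)
probs[np.arange(4), pred_idx] = 0.55

acc = accuracy(y_true, probs)
cm = confusion_matrix(y_true, np.argmax(probs, axis=1))
print(acc)       # 0.75
print(cm[3, 5])  # 1
```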

6. Making Predictions

Let's check a few predictions on test images. In an IoT context, these images might come from a remote camera, and the model could classify them on the edge device or via a cloud service.

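```python
# Predict class probabilities for the first 5 test images
predictions = model.predict(x_test[:5])
predicted_labels = np.argmax(predictions, axis=1)  # index of the highest probability

fig, axes = plt.subplots(1, 5, figsize=(10, 3))
for i in range(5):
    axes[i].imshow(x_test[i])
    axes[i].set_title(f"Pred: {class_names[predicted_labels[i]]}\n"
                      f"True: {class_names[y_test[i][0]]}")
    axes[i].axis('off')
plt.show()
```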
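For a remote camera, the single best label often hides useful information, such as how close the runner-up was. This sketch lists the top three classes for one hypothetical softmax output; the confidence threshold used to decide whether to trust the label is a hypothetical reporting rule, not something the model provides.

```python
import numpy as np

class_names = ["airplane", "automobile", "bird", "cat", "deer",
               "dog", "frog", "horse", "ship", "truck"]

def top_k(probs_row, k=3):
    """Return the k most likely (class name, probability) pairs, highest first."""
    idx = np.argsort(probs_row)[-k:][::-1]
    return [(class_names[i], float(probs_row[i])) for i in idx]

# Hypothetical softmax output for one image (sums to 1.0)
probs_row = np.array([0.02, 0.01, 0.05, 0.40, 0.03, 0.30, 0.04, 0.10, 0.03, 0.02])

result = top_k(probs_row)
print(result)

# Hypothetical reporting rule: only trust the label above a chosen confidence
CONFIDENCE_THRESHOLD = 0.5
best_name, best_prob = result[0]
print(best_name if best_prob >= CONFIDENCE_THRESHOLD else "uncertain")
```

Here "cat" wins but only narrowly over "dog", so the rule reports "uncertain"; in practice the threshold would be tuned on validation data.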

7. Summary and IoT Integration

Our CNN learns to classify CIFAR-10 images well above the 10% accuracy expected from random guessing after just five epochs; the exact test accuracy printed in section 5 varies from run to run. In an IoT scenario, similar models could be deployed on small devices like a Raspberry Pi (e.g., using TensorFlow Lite) or run in the cloud for real-time image classification. This example demonstrates how convolutional layers learn local features such as edges and textures, and how max-pooling layers shrink each feature map while keeping the strongest response in every pooling window.
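The convolution and pooling ideas above can be made concrete with a small NumPy sketch. It applies one hypothetical 3x3 vertical-edge kernel to a hypothetical 6x6 grayscale image, then ReLU, then 2x2 max pooling. It is simplified to a single channel: a real Conv2D filter also sums over all input channels and adds a learned bias. Like Keras's Conv2D, it computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_single(image, kernel):
    """'valid' cross-correlation of a single-channel image with one kernel, stride 1."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    h2, w2 = fmap.shape[0] // 2, fmap.shape[1] // 2
    trimmed = fmap[:h2 * 2, :w2 * 2]
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))

# Hypothetical 6x6 image: dark left half (0.0), bright right half (1.0)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hypothetical vertical-edge kernel: responds to dark-to-bright transitions
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

fmap = np.maximum(conv2d_single(image, kernel), 0.0)  # ReLU
pooled = max_pool_2x2(fmap)
print(fmap)    # every row is [0. 3. 3. 0.]: strong response only at the edge
print(pooled)  # 2x2 map of 3.0: the edge response survives pooling
```

The feature map shrinks from 4x4 to 2x2, a quarter of the values, yet every pooled cell still records that an edge was present in its region.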

Ideas for IoT Integration:

- Convert this model to TensorFlow Lite to run on edge devices with limited resources.

- Use a camera-equipped device (a Raspberry Pi or another single-board computer) to capture real-time images and classify them locally.

- Send the images or classification results to a remote server for centralized monitoring or logging.
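The resource and bandwidth trade-offs behind these ideas can be estimated with simple arithmetic. This sketch computes the raw float32 weight storage for the 167,562-parameter model built in section 3 (ignoring any file-format overhead), and compares sending one 32x32 RGB frame against sending a small JSON classification result. The message fields are hypothetical. Note also that a real camera captures far larger frames, which would have to be resized to 32x32x3 before this model could classify them.

```python
import json

# Total parameters of the section 3 architecture
# (Conv2D 32 -> Conv2D 64 -> Dense 64 -> Dense 10), as counted by model.summary()
TOTAL_PARAMS = 167_562
BYTES_PER_FLOAT32 = 4

weights_bytes = TOTAL_PARAMS * BYTES_PER_FLOAT32
print(f"float32 weights: {weights_bytes:,} bytes (~{weights_bytes / 1024:.0f} KiB)")

# One 32x32 RGB frame stored as uint8: one byte per channel value
image_bytes = 32 * 32 * 3

# Hypothetical result message an edge device could send instead of the frame
result = {"device_id": "cam-01", "label": "cat", "confidence": 0.87}
payload = json.dumps(result).encode("utf-8")
print(f"raw frame: {image_bytes} bytes, JSON result: {len(payload)} bytes")
```

Even at this tiny resolution, sending the result instead of the image cuts the payload by well over an order of magnitude, and the gap grows with real camera resolutions.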